– Training the convolutional neural network

1. Load the dataset -> (Fashion MNIST)

import tensorflow as tf

(x_train_all, y_train_all), (x_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

##Output:

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz

29515/29515 (==============================) - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz

26421880/26421880 (==============================) - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz

5148/5148 (==============================) - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz

4422102/4422102 (==============================) - 0s 0us/step

2. Split the training dataset into training and validation sets

from sklearn.model_selection import train_test_split

x_train, x_val, y_train, y_val = train_test_split(x_train_all, y_train_all, stratify=y_train_all,
                                                  test_size=0.2, random_state=42)
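To see what `stratify=y_train_all` is for, here is a minimal NumPy sketch of a stratified 80/20 split on made-up labels. The helper `stratified_split` and the toy label array are hypothetical illustrations, not scikit-learn's implementation; the sketch only shows that each class keeps the same proportion in both subsets.

```python
import numpy as np

def stratified_split(labels, test_size=0.2, seed=42):
    """Return (train_idx, val_idx) so that each class keeps its proportion."""
    rng = np.random.default_rng(seed)
    train_idx, val_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)      # positions of this class
        rng.shuffle(idx)                         # shuffle within the class
        n_val = int(round(len(idx) * test_size))
        val_idx.extend(idx[:n_val])
        train_idx.extend(idx[n_val:])
    return np.array(train_idx), np.array(val_idx)

# Toy labels: 10 classes with 100 samples each (hypothetical data).
labels = np.repeat(np.arange(10), 100)
train_idx, val_idx = stratified_split(labels)

print(np.bincount(labels[train_idx]))  # 80 samples in every class
print(np.bincount(labels[val_idx]))    # 20 samples in every class
```

Fashion MNIST is already balanced, so stratification mostly guards against an unlucky shuffle; it matters more when classes are uneven.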

3. Convert the targets to one-hot encoding

y_train_encoded = tf.keras.utils.to_categorical(y_train)

y_val_encoded = tf.keras.utils.to_categorical(y_val)
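As a rough picture of what the one-hot conversion produces, the sketch below builds the same kind of array with plain NumPy. The label values are made up for illustration, and indexing an identity matrix is just one simple way to do it, not necessarily how `to_categorical` works internally.

```python
import numpy as np

y = np.array([9, 0, 3])   # hypothetical class labels
num_classes = 10          # Fashion MNIST has 10 classes

# Row i of the identity matrix is the one-hot vector for class i.
y_encoded = np.eye(num_classes, dtype=np.float32)[y]

print(y_encoded.shape)  # (3, 10)
print(y_encoded[0])     # 1.0 at index 9, zeros elsewhere
```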

4. Prepare the input data

x_train = x_train.reshape(-1, 28, 28, 1)

x_val = x_val.reshape(-1, 28, 28, 1)

x_train.shape

##Output: (48000, 28, 28, 1)
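The reshape adds a trailing channel axis, because a 2D convolution layer expects input of shape (batch, height, width, channels). A small sketch with a made-up array standing in for the images:

```python
import numpy as np

# Hypothetical stand-in for 5 grayscale images of 28x28 pixels.
images = np.zeros((5, 28, 28), dtype=np.uint8)

# -1 lets NumPy infer the batch size; the final 1 is the single grayscale channel.
images_4d = images.reshape(-1, 28, 28, 1)

print(images_4d.shape)  # (5, 28, 28, 1)
```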

5. Preprocess the input data by scaling pixel values to the 0-1 range

x_train = x_train / 255

x_val = x_val / 255
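Dividing by 255 maps the 8-bit pixel range 0..255 onto 0..1 and, as a side effect, turns the integer array into floats. A tiny sketch with made-up pixel values:

```python
import numpy as np

pixels = np.array([0, 51, 255], dtype=np.uint8)  # hypothetical pixel values
scaled = pixels / 255                            # true division promotes to float64

print(scaled.dtype)                # float64
print(scaled.min(), scaled.max())  # 0.0 1.0
```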

6. Train the model

cn = ConvolutionNetwork(n_kernels=10, units=100, batch_size=128, learning_rate=0.01)

cn.fit(x_train, y_train_encoded,
       x_val=x_val, y_val=y_val_encoded, epochs=20)

##Output:

/usr/local/lib/python3.8/dist-packages/keras/initializers/initializers_v2.py:120: UserWarning: The initializer GlorotUniform is unseeded and being called multiple times, which will return identical values each time (even if the initializer is unseeded). Please update your code to provide a seed to the initializer, or avoid using the same initalizer instance more than once.

warnings.warn(

Epoch 0 ........................................

Epoch 1 ........................................

Epoch 2 ........................................

Epoch 3 ........................................

Epoch 4 ........................................

Epoch 5 ........................................

Epoch 6 ........................................

Epoch 7 ........................................

Epoch 8 ........................................

Epoch 9 ........................................

Epoch 10 ........................................

Epoch 11 ........................................

Epoch 12 ........................................

Epoch 13 ........................................

Epoch 14 ........................................

Epoch 15 ........................................

Epoch 16 ........................................

Epoch 17 ........................................

Epoch 18 ........................................

Epoch 19 ........................................

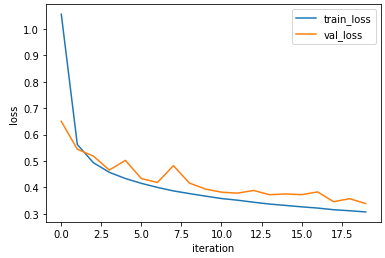

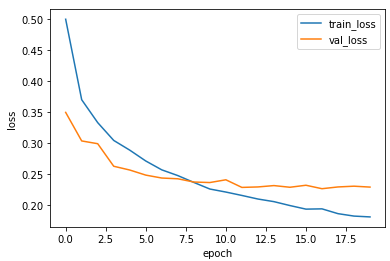

7. Plot the training and validation loss curves and check the accuracy on the validation set

import matplotlib.pyplot as plt

plt.plot(cn.losses)

plt.plot(cn.val_losses)

plt.ylabel('loss')

plt.xlabel('iteration')

plt.legend(('train_loss', 'val_loss'))

plt.show()

cn.score(x_val, y_val_encoded)

##Output: 0.88325
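The `score` method of the `ConvolutionNetwork` class is not shown in this section. A plausible reading, sketched below under that assumption, is that it compares the predicted class (the argmax of the network output) with the class marked in the one-hot target and reports the fraction of matches. The function name and the sample numbers are hypothetical.

```python
import numpy as np

def accuracy_from_one_hot(probs, y_one_hot):
    """Fraction of rows whose highest-scoring class matches the one-hot target."""
    return float(np.mean(np.argmax(probs, axis=1) == np.argmax(y_one_hot, axis=1)))

# Hypothetical predictions for 4 samples over 3 classes.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4],
                  [0.6, 0.3, 0.1]])
targets = np.eye(3)[[0, 1, 2, 1]]   # one-hot targets; the last sample is misclassified

print(accuracy_from_one_hot(probs, targets))  # 0.75
```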

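8-5 Building a convolutional neural network with Keras

– Implementing a convolutional neural network with Keras

In Keras, the convolutional layer is the Conv2D class.

Max pooling is the MaxPooling2D class.

To flatten the feature maps, use the Flatten class.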

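1. Import the required classes

2. Stack the convolutional layer

3. Add the pooling layer

4. Flatten the feature maps so they can be fed into the fully connected layer

5. Add the fully connected layers

6. Review the model structure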
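The steps above change the shape of a 28x28x1 input in a predictable way. The arithmetic below is a sketch of that bookkeeping, assuming 10 kernels, `padding='same'` with stride 1, and 2x2 pooling, which are the settings used in this section's model.

```python
import math

height, width, channels = 28, 28, 1
n_kernels = 10

# padding='same' with stride 1 keeps the spatial size; only the channel count changes.
conv_out = (height, width, n_kernels)

# 2x2 max pooling (stride defaults to the pool size) halves height and width.
pool_out = (conv_out[0] // 2, conv_out[1] // 2, n_kernels)

# Flatten turns the stack of feature maps into one vector per sample.
flat_out = math.prod(pool_out)

print(conv_out)  # (28, 28, 10)
print(pool_out)  # (14, 14, 10)
print(flat_out)  # 1960
```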

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

conv1 = tf.keras.Sequential()

conv1.add(Conv2D(10, (3, 3), activation='relu', padding='same', input_shape=(28, 28, 1)))

conv1.add(MaxPooling2D((2, 2)))

conv1.add(Flatten())

conv1.add(Dense(100, activation='relu'))

conv1.add(Dense(10, activation='softmax'))

conv1.summary()

##Output:

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 28, 28, 10) 100

max_pooling2d_1 (MaxPooling (None, 14, 14, 10) 0

2D)

flatten_1 (Flatten) (None, 1960) 0

dense_2 (Dense) (None, 100) 196100

dense_3 (Dense) (None, 10) 1010

=================================================================

Total params: 197,210

Trainable params: 197,210

Non-trainable params: 0

_________________________________________________________________

– Training the convolutional neural network model

1. To monitor accuracy, pass the list ['accuracy'] to the metrics parameter.

conv1.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

2. Use the Adam optimizer -> (Adaptive Moment Estimation)

history = conv1.fit(x_train, y_train_encoded, epochs=20,
                    validation_data=(x_val, y_val_encoded))

##Output:

Epoch 1/20

1500/1500 (==============================) - 30s 18ms/step - loss: 0.4541 - accuracy: 0.8419 - val_loss: 0.3870 - val_accuracy: 0.8583

Epoch 2/20

1500/1500 (==============================) - 25s 17ms/step - loss: 0.3140 - accuracy: 0.8869 - val_loss: 0.3006 - val_accuracy: 0.8932

Epoch 3/20

1500/1500 (==============================) - 29s 19ms/step - loss: 0.2710 - accuracy: 0.9015 - val_loss: 0.2760 - val_accuracy: 0.9014

Epoch 4/20

1500/1500 (==============================) - 25s 17ms/step - loss: 0.2367 - accuracy: 0.9126 - val_loss: 0.2634 - val_accuracy: 0.9066

Epoch 5/20

1500/1500 (==============================) - 25s 17ms/step - loss: 0.2115 - accuracy: 0.9201 - val_loss: 0.2582 - val_accuracy: 0.9098

Epoch 6/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.1908 - accuracy: 0.9302 - val_loss: 0.2454 - val_accuracy: 0.9137

Epoch 7/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.1708 - accuracy: 0.9369 - val_loss: 0.2535 - val_accuracy: 0.9108

Epoch 8/20

1500/1500 (==============================) - 27s 18ms/step - loss: 0.1528 - accuracy: 0.9445 - val_loss: 0.2502 - val_accuracy: 0.9163

Epoch 9/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.1354 - accuracy: 0.9506 - val_loss: 0.2585 - val_accuracy: 0.9172

Epoch 10/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.1214 - accuracy: 0.9549 - val_loss: 0.2568 - val_accuracy: 0.9177

Epoch 11/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.1084 - accuracy: 0.9600 - val_loss: 0.2679 - val_accuracy: 0.9189

Epoch 12/20

1500/1500 (==============================) - 24s 16ms/step - loss: 0.0971 - accuracy: 0.9647 - val_loss: 0.2785 - val_accuracy: 0.9168

Epoch 13/20

1500/1500 (==============================) - 24s 16ms/step - loss: 0.0846 - accuracy: 0.9699 - val_loss: 0.3072 - val_accuracy: 0.9125

Epoch 14/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.0766 - accuracy: 0.9721 - val_loss: 0.3149 - val_accuracy: 0.9144

Epoch 15/20

1500/1500 (==============================) - 24s 16ms/step - loss: 0.0690 - accuracy: 0.9754 - val_loss: 0.3250 - val_accuracy: 0.9155

Epoch 16/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.0610 - accuracy: 0.9786 - val_loss: 0.3486 - val_accuracy: 0.9119

Epoch 17/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.0551 - accuracy: 0.9807 - val_loss: 0.3716 - val_accuracy: 0.9091

Epoch 18/20

1500/1500 (==============================) - 25s 17ms/step - loss: 0.0487 - accuracy: 0.9827 - val_loss: 0.3686 - val_accuracy: 0.9158

Epoch 19/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.0427 - accuracy: 0.9846 - val_loss: 0.3842 - val_accuracy: 0.9128

Epoch 20/20

1500/1500 (==============================) - 25s 16ms/step - loss: 0.0414 - accuracy: 0.9850 - val_loss: 0.4082 - val_accuracy: 0.9156

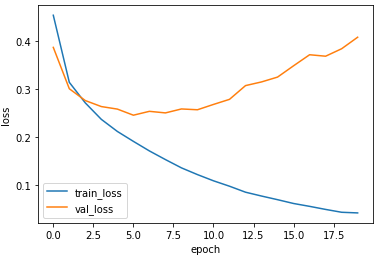

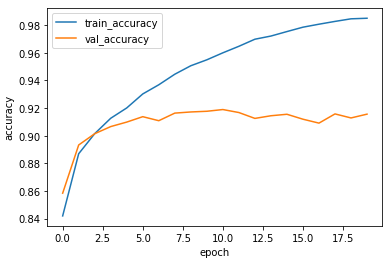

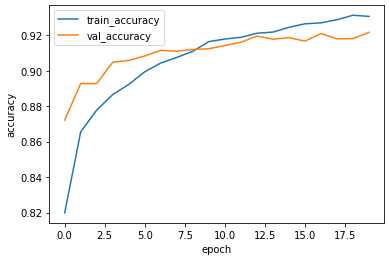
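For reference, the Adam update used in step 2 can be sketched in a few lines of NumPy. This follows the textbook formulation with the hyperparameter values that Keras uses by default (learning rate 0.001, beta_1 0.9, beta_2 0.999, epsilon 1e-7). The function `adam_step` and the toy objective are illustrative assumptions; the library's internal implementation may differ in detail.

```python
import numpy as np

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update for parameter w at step t (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients (first moment)
    v = beta2 * v + (1 - beta2) * grad ** 2    # running mean of squared gradients (second moment)
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

# Toy problem (not the CNN above): minimize f(w) = w**2 starting from w = 1.0.
w, m, v = 1.0, 0.0, 0.0
for t in range(1, 101):
    grad = 2 * w                               # derivative of w**2
    w, m, v = adam_step(w, grad, m, v, t)

print(w)  # smaller than 1.0: w has moved toward the minimum at 0
```

Because the step is divided by the root of the second moment, the first update has a size of roughly `lr` regardless of how large the gradient is.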

3. Check the loss and accuracy plots

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()

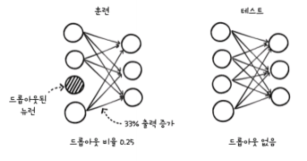
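The validation losses in the conv1 training log above stop improving after a few epochs while the training loss keeps falling, which is the usual sign of overfitting. The short sketch below finds the epoch with the lowest validation loss; the numbers are copied from that log, and in a live session the same list would be available as `history.history['val_loss']`.

```python
# val_loss values copied from the conv1 training log (epochs 1 to 20).
val_loss = [0.3870, 0.3006, 0.2760, 0.2634, 0.2582, 0.2454, 0.2535, 0.2502,
            0.2585, 0.2568, 0.2679, 0.2785, 0.3072, 0.3149, 0.3250, 0.3486,
            0.3716, 0.3686, 0.3842, 0.4082]

# Index of the smallest value, converted to a 1-based epoch number.
best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1

print(best_epoch, val_loss[best_epoch - 1])  # 6 0.2454
print(val_loss[-1])                          # 0.4082 at epoch 20
```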

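– Dropout

A method for reducing overfitting in neural networks

It randomly deactivates some neurons during training

In TensorFlow, the outputs of the neurons that stay active are scaled up by 1 / (1 - dropout rate) during training, so the expected total output is unchanged.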
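The mechanism can be sketched in NumPy as "inverted dropout": a random mask zeroes a fraction of the activations and the survivors are rescaled so the expected total stays the same. The function `dropout` and the all-ones input are hypothetical; this illustrates the idea and is not the Keras layer's source code.

```python
import numpy as np

def dropout(x, rate, rng):
    """Inverted dropout: zero a random fraction `rate` of x and rescale the rest."""
    keep_mask = rng.random(x.shape) >= rate    # True for neurons that stay active
    return x * keep_mask / (1.0 - rate)

rng = np.random.default_rng(0)
activations = np.ones(10000)                   # hypothetical layer output
dropped = dropout(activations, rate=0.5, rng=rng)

print(np.unique(dropped))  # [0. 2.]: dropped neurons are 0, kept ones are doubled
print(dropped.mean())      # close to 1.0, the mean of the original activations
```

At inference time no mask is applied, which is why the rescaling is done during training instead.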

– Implementing a convolutional neural network with dropout

Add the Dropout class

1. Apply Dropout to the convolutional neural network built with Keras

from tensorflow.keras.layers import Dropout

conv2 = tf.keras.Sequential()

conv2.add(Conv2D(10, (3, 3), activation='relu', padding='same', input_shape=(28, 28, 1)))

conv2.add(MaxPooling2D((2, 2)))

conv2.add(Flatten())

conv2.add(Dropout(0.5))

conv2.add(Dense(100, activation='relu'))

conv2.add(Dense(10, activation='softmax'))

2. Check the dropout layer

conv2.summary()

##Output:

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_2 (Conv2D) (None, 28, 28, 10) 100

max_pooling2d_2 (MaxPooling (None, 14, 14, 10) 0

2D)

flatten_2 (Flatten) (None, 1960) 0

dropout (Dropout) (None, 1960) 0

dense_4 (Dense) (None, 100) 196100

dense_5 (Dense) (None, 10) 1010

=================================================================

Total params: 197,210

Trainable params: 197,210

Non-trainable params: 0

_________________________________________________________________
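The parameter counts in the summary can be reproduced by hand, which is a useful check that the layers are wired as intended. The arithmetic below assumes the layer sizes shown above (3x3 kernels, 1 input channel, 10 kernels, 1960 flattened features).

```python
# Conv2D: one 3x3 kernel per (input channel, output channel) pair, plus one bias per kernel.
conv_params = 3 * 3 * 1 * 10 + 10

# Dense(100): 1960 flattened inputs fully connected to 100 units, plus 100 biases.
dense1_params = 1960 * 100 + 100

# Dense(10): 100 inputs fully connected to 10 output units, plus 10 biases.
dense2_params = 100 * 10 + 10

# MaxPooling2D, Flatten and Dropout have no trainable weights.
total = conv_params + dense1_params + dense2_params

print(conv_params, dense1_params, dense2_params, total)  # 100 196100 1010 197210
```

Almost all of the weights sit in the first dense layer, which is also the layer whose input the Dropout layer thins out.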

3. Train

conv2.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

history = conv2.fit(x_train, y_train_encoded, epochs=20, validation_data=(x_val, y_val_encoded))

##Output:

Epoch 1/20

1500/1500 (==============================) - 28s 18ms/step - loss: 0.5001 - accuracy: 0.8198 - val_loss: 0.3494 - val_accuracy: 0.8721

Epoch 2/20

1500/1500 (==============================) - 27s 18ms/step - loss: 0.3699 - accuracy: 0.8655 - val_loss: 0.3032 - val_accuracy: 0.8928

Epoch 3/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.3328 - accuracy: 0.8778 - val_loss: 0.2987 - val_accuracy: 0.8928

Epoch 4/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.3040 - accuracy: 0.8866 - val_loss: 0.2622 - val_accuracy: 0.9048

Epoch 5/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2883 - accuracy: 0.8923 - val_loss: 0.2561 - val_accuracy: 0.9058

Epoch 6/20

1500/1500 (==============================) - 27s 18ms/step - loss: 0.2707 - accuracy: 0.8994 - val_loss: 0.2479 - val_accuracy: 0.9083

Epoch 7/20

1500/1500 (==============================) - 28s 19ms/step - loss: 0.2564 - accuracy: 0.9044 - val_loss: 0.2431 - val_accuracy: 0.9115

Epoch 8/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2471 - accuracy: 0.9075 - val_loss: 0.2419 - val_accuracy: 0.9111

Epoch 9/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2360 - accuracy: 0.9110 - val_loss: 0.2367 - val_accuracy: 0.9120

Epoch 10/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2253 - accuracy: 0.9165 - val_loss: 0.2358 - val_accuracy: 0.9124

Epoch 11/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2204 - accuracy: 0.9179 - val_loss: 0.2403 - val_accuracy: 0.9143

Epoch 12/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2149 - accuracy: 0.9189 - val_loss: 0.2280 - val_accuracy: 0.9161

Epoch 13/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2092 - accuracy: 0.9212 - val_loss: 0.2287 - val_accuracy: 0.9196

Epoch 14/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.2049 - accuracy: 0.9218 - val_loss: 0.2309 - val_accuracy: 0.9178

Epoch 15/20

1500/1500 (==============================) - 28s 18ms/step - loss: 0.1986 - accuracy: 0.9245 - val_loss: 0.2282 - val_accuracy: 0.9187

Epoch 16/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.1928 - accuracy: 0.9265 - val_loss: 0.2314 - val_accuracy: 0.9168

Epoch 17/20

1500/1500 (==============================) - 25s 17ms/step - loss: 0.1932 - accuracy: 0.9270 - val_loss: 0.2259 - val_accuracy: 0.9210

Epoch 18/20

1500/1500 (==============================) - 26s 18ms/step - loss: 0.1855 - accuracy: 0.9288 - val_loss: 0.2287 - val_accuracy: 0.9180

Epoch 19/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.1815 - accuracy: 0.9313 - val_loss: 0.2299 - val_accuracy: 0.9182

Epoch 20/20

1500/1500 (==============================) - 26s 17ms/step - loss: 0.1802 - accuracy: 0.9307 - val_loss: 0.2285 - val_accuracy: 0.9217

4. Plot the loss and accuracy

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train_loss', 'val_loss'])

plt.show()

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train_accuracy', 'val_accuracy'])

plt.show()

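The epoch at which the validation loss starts to rise is delayed, and the gap between training loss and validation loss narrows.

Validation accuracy improves as well.

-> In a classification problem, accuracy cannot be optimized directly, but the cross-entropy loss function can.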
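One way to see why cross-entropy is the quantity being optimized: accuracy only changes when the predicted class flips, whereas cross-entropy responds to every change in the predicted probabilities. The sketch below uses two made-up predictions that are both correct but differ in confidence; the helper functions are illustrative, not Keras APIs.

```python
import numpy as np

def cross_entropy(probs, y_one_hot):
    """Mean categorical cross-entropy for predicted class probabilities."""
    return float(np.mean(-np.sum(y_one_hot * np.log(probs), axis=1)))

def accuracy(probs, y_one_hot):
    """Fraction of rows where the most probable class matches the target."""
    return float(np.mean(np.argmax(probs, axis=1) == np.argmax(y_one_hot, axis=1)))

target = np.array([[1.0, 0.0, 0.0]])         # true class is 0
unsure = np.array([[0.4, 0.3, 0.3]])         # correct, but barely
confident = np.array([[0.9, 0.05, 0.05]])    # correct and confident

print(accuracy(unsure, target), accuracy(confident, target))            # 1.0 1.0
print(cross_entropy(unsure, target), cross_entropy(confident, target))  # about 0.916 and 0.105
```

Accuracy is identical in both cases, so it gives gradient descent nothing to follow, while the loss rewards the more confident correct prediction.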

loss, accuracy = conv2.evaluate(x_val, y_val_encoded, verbose=0)

print(accuracy)

##Output: 0.92166668176651
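Putting the final-epoch numbers from the two training logs side by side makes the effect of dropout concrete. The values below are copied from epoch 20 of each log; the dictionary layout is just a convenient way to compare them.

```python
# Final-epoch (epoch 20) values copied from the two training logs above.
runs = {
    "conv1 (no dropout)":  {"loss": 0.0414, "val_loss": 0.4082, "val_accuracy": 0.9156},
    "conv2 (dropout 0.5)": {"loss": 0.1802, "val_loss": 0.2285, "val_accuracy": 0.9217},
}

# Gap between validation loss and training loss for each run.
gaps = {name: round(r["val_loss"] - r["loss"], 4) for name, r in runs.items()}

for name, r in runs.items():
    print(name, "gap:", gaps[name], "val_accuracy:", r["val_accuracy"])
```

The gap shrinks from 0.3668 to 0.0483, and validation accuracy rises slightly from 0.9156 to 0.9217.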

※ Contents